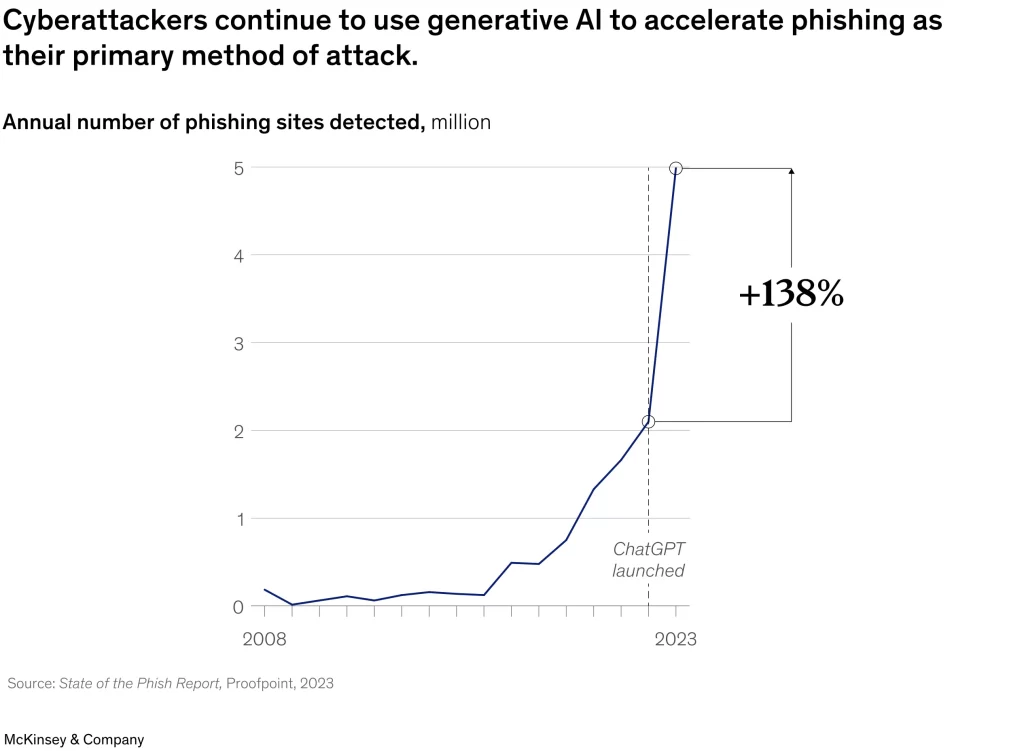

Ten years ago, cybersecurity focused on putting up walls: firewalls, antivirus software, and stringent regulations designed to keep attackers out. However, today, these walls are being challenged in a completely different way. AI-driven malware can now change its behaviour mid-attack, evade static scanners, and even manipulate the very AI systems meant to stop it. Traditional cybersecurity was never designed to face intelligent, self-adapting threats, creating a growing gap between how organisations defend themselves and how modern attacks actually operate.

This raises a critical question: what happens when malware learns faster than your security team? To understand why traditional cybersecurity is no longer keeping pace, it is important to examine how AI is changing malware, including the launch of autonomous attacks, the shift towards behaviour-based defences, and new vulnerabilities such as data poisoning and insecure AI.

Current Preventive Tactics

Conventional cybersecurity systems and networks are based on tools such as firewalls, signature-based antivirus software, and static malware scanners. These tools identify dangers by comparing them to established patterns, signatures, and predefined criteria. While this strategy is effective against previously identified attacks, it assumes that threats remain relatively constant and predictable.

However, AI-driven attacks do not follow this pattern. Modern malware can adapt its structure, behaviour, and execution in real time, making it easier to evade static detection systems. Because these systems rely on historical data and rigid rules, they struggle to address emerging adaptive threats. Thus, defences designed for known dangers are becoming increasingly inadequate against attackers that are constantly learning and evolving.
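To illustrate why exact-match signatures are brittle, here is a minimal sketch that assumes a signature database made only of SHA-256 digests. The names `SIGNATURES` and `is_flagged` are hypothetical, the sample bytes are a harmless placeholder, and real antivirus products combine hashes with byte patterns and heuristics, so this shows only the simplest case.

```python
import hashlib

# Hypothetical signature database: digests of samples that were seen before.
KNOWN_BAD_SAMPLE = b"example-payload-v1"  # harmless placeholder bytes
SIGNATURES = {hashlib.sha256(KNOWN_BAD_SAMPLE).hexdigest()}


def is_flagged(sample: bytes) -> bool:
    """Return True if the sample's digest matches a known signature."""
    return hashlib.sha256(sample).hexdigest() in SIGNATURES


original = KNOWN_BAD_SAMPLE
variant = KNOWN_BAD_SAMPLE + b" "  # a single extra byte changes the digest

print(is_flagged(original))  # True
print(is_flagged(variant))   # False
```

A trivially altered variant no longer matches, which is why a threat that rewrites itself on every run can slip past a purely signature-based check.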

How AI is changing malware

AI has turned malware from a static threat into an adaptive, self-modifying one. Regular malware is developed once and then distributed many times in the same form. AI-based malware, by contrast, can change or rewrite sections of its own code while an attack is underway. This allows it to alter its structure, encryption, or execution paths in response to the security controls it meets, so that every instance of the malware looks different. Polymorphic and metamorphic techniques of this kind allow it to slip past static scanners and signature-based detection systems, which rely on recognising known code patterns.

Cybercriminals are developing increasingly sophisticated types of malware. AI-powered malware can also adjust its behaviour to suit the environment it encounters, prioritising stealth over speed when that serves the attack. Malware is therefore no longer a fixed programme; it is beginning to act like a thinking adversary that reacts to defensive measures.

Cybersecurity’s progressive defence

As AI-driven attacks become harder to detect with signature-based defences, cybersecurity is moving away from perimeter-focused security towards behaviour-based detection. User and Entity Behaviour Analytics (UEBA) supports this transition by observing how users, devices, and systems interact over time. Unlike signature-based tools, which look for patterns already known to be harmful, UEBA builds a baseline of normal activity and flags deviations that may indicate an attack.

This type of defence can be very effective against AI-powered threats, which appear legitimate at the code level but behave strangely once inside a system. By examining anomalies such as unusual login hours, data access, or system interactions, UEBA helps recognise previously undetected threats. Effective defence today depends less on knowing what software is running and more on knowing what is behaving strangely.
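The baseline-and-deviation idea can be sketched with a simple z-score over one user's login hours. This is an illustrative assumption rather than how any particular UEBA product works: the function names, the sample history, and the threshold of 3 standard deviations are all hypothetical, and production systems model many signals at once.

```python
from statistics import mean, stdev


def build_baseline(login_hours: list[float]) -> tuple[float, float]:
    """Summarise normal behaviour as the mean and standard deviation."""
    return mean(login_hours), stdev(login_hours)


def is_anomalous(hour: float, baseline: tuple[float, float],
                 threshold: float = 3.0) -> bool:
    """Flag a login whose hour is far from the user's usual pattern.

    Simplification: hours are treated as a plain number line, so the
    wrap-around between 23:00 and 00:00 is ignored.
    """
    mu, sigma = baseline
    if sigma == 0:
        return hour != mu
    return abs(hour - mu) / sigma > threshold


# Hypothetical history: a user who normally logs in around 09:00.
history = [9, 9.5, 8.75, 9.25, 10, 9, 8.5, 9.75, 9.25, 9]
baseline = build_baseline(history)

print(is_anomalous(9.5, baseline))  # False: within the usual range
print(is_anomalous(3.0, baseline))  # True: a 03:00 login is far outside it
```

Nothing in this check inspects the software being run; it only asks whether the observed behaviour fits the established pattern.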

Agentic AI

Agentic AI refers to systems that function as autonomous agents: they plan, act, and adapt to achieve specific goals without continuous human guidance. In cybersecurity, attackers are increasingly using agentic AI to automate an entire attack chain, from initial reconnaissance to exploitation and lateral movement within networks. These systems can scan for weaknesses, choose the best attack route, and adapt their strategies in real time when they encounter a defence.

This level of autonomy allows attackers to operate at machine speed, which is much faster than traditional, human-led defence processes. Human-centric security operations that rely on alerts, manual analysis, and response workflows cannot keep up with such rapid, adaptive threats. Consequently, conventional defences usually respond only after significant damage has already occurred.

The fragile structure

As organisations rely more heavily on AI for key decisions and threat detection, attackers are beginning to target the AI itself. Generative data poisoning occurs when adversaries manipulate the output of an enterprise AI system by injecting misleading or malicious data into it. Over time, the poisoned data teaches the model to classify harmful activity as legitimate, effectively training it to disregard certain attacks. This type of attack goes largely undetected by conventional security tools because no malicious code is executed and no conventional intrusion takes place. Firewalls and antivirus programs are not designed to identify corrupted learning processes or biased model behaviour. The unfortunate outcome is that a “trusted” AI system stays up and running while making wrong or dangerous security decisions.
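A toy example can make the effect of poisoned training data concrete. The sketch below assumes a deliberately simple detector that learns a single threshold on a one-dimensional risk score; the scores, labels, and function names are invented for illustration and do not represent any real detection model.

```python
from statistics import mean


def train_threshold(samples: list[tuple[float, str]]) -> float:
    """Learn a cut-off halfway between the benign and malicious averages."""
    benign = [score for score, label in samples if label == "benign"]
    malicious = [score for score, label in samples if label == "malicious"]
    return (mean(benign) + mean(malicious)) / 2


def classify(score: float, threshold: float) -> str:
    return "malicious" if score >= threshold else "benign"


# Hypothetical clean training data: (risk score, label).
clean = [(0.1, "benign"), (0.2, "benign"), (0.3, "benign"),
         (0.7, "malicious"), (0.8, "malicious"), (0.9, "malicious")]

# Poisoned records: high-risk activity deliberately mislabelled as benign.
poison = [(0.85, "benign")] * 4

clean_threshold = train_threshold(clean)
poisoned_threshold = train_threshold(clean + poison)

suspicious_event = 0.65
print(classify(suspicious_event, clean_threshold))     # malicious
print(classify(suspicious_event, poisoned_threshold))  # benign
```

No code was exploited and no system was breached; the model simply learned a more permissive boundary from the data it was given, so the same event is now waved through.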

Conclusion

As artificial intelligence becomes a means of defence as well as a target, companies have to deploy AISPM (Artificial Intelligence Security Posture Management) to safeguard their AI ecosystems. AISPM continuously monitors data integrity, model behaviour, access permissions, and model drift to ensure that AI systems work as intended and are not being tampered with. Instead of being viewed as a static implementation, AI should be treated as a vital attack surface that demands continuous governance and supervision.
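Two of these checks, data integrity and model drift, can be sketched in a few lines. This is a simplified illustration under stated assumptions, not a description of any AISPM product: the record format, the function names, the baseline rate, and the 10-point tolerance are all hypothetical.

```python
import hashlib


def fingerprint(records: list[str]) -> str:
    """Hash the training records so that any later change is detectable."""
    digest = hashlib.sha256()
    for record in records:
        digest.update(record.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def benign_rate(predictions: list[str]) -> float:
    """Share of recent model outputs labelled 'benign'."""
    return predictions.count("benign") / len(predictions)


def posture_alerts(records: list[str], approved_fingerprint: str,
                   predictions: list[str], baseline_rate: float,
                   tolerance: float = 0.10) -> list[str]:
    """Return alerts for data tampering and for drift in model output."""
    alerts = []
    if fingerprint(records) != approved_fingerprint:
        alerts.append("training data changed since approval")
    if abs(benign_rate(predictions) - baseline_rate) > tolerance:
        alerts.append("model output drifted from baseline")
    return alerts


# Hypothetical approved dataset and a later, tampered copy.
approved = ["login,09:00,ok", "login,09:30,ok"]
approved_fp = fingerprint(approved)
tampered = approved + ["login,03:00,ok"]

healthy = posture_alerts(approved, approved_fp,
                         ["benign"] * 8 + ["malicious"] * 2, 0.80)
suspect = posture_alerts(tampered, approved_fp,
                         ["benign"] * 19 + ["malicious"], 0.80)

print(healthy)  # []
print(suspect)  # both alerts are raised
```

Running checks like these on a schedule, rather than once at deployment, is what turns the model from a static implementation into a monitored attack surface.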

Cybersecurity is no longer a contest of human against human; it has become a contest of AI against AI. Traditional security tools remain important, but they are no longer enough on their own. Organisations must go beyond static defences and commit to sustained, escalating efforts that operate at machine speed.

Written by – Devangee Kedia

The post Rethinking Cybersecurity for AI-Driven Threats appeared first on The Economic Transcript.