The moment so many have feared and warned about is here: U.S. forces on the ground near Gaza are keeping the peace between the two sides, who have been doing their best to kill each other for decades.

One wrong move — one misunderstanding or miscalculation — could easily lead to renewed bloodshed. But this time, it could be the blood of our sons and daughters giving their last full measure of devotion in a conflict far from home.

That is the reality, and the risk, of helping to end this latest chapter of the Israeli-Palestinian conflict, which has caused so much suffering on both sides. There may be no alternative to putting our military in harm’s way today, but there can and must be one in the future, and we need to embrace and pursue it as soon as possible.

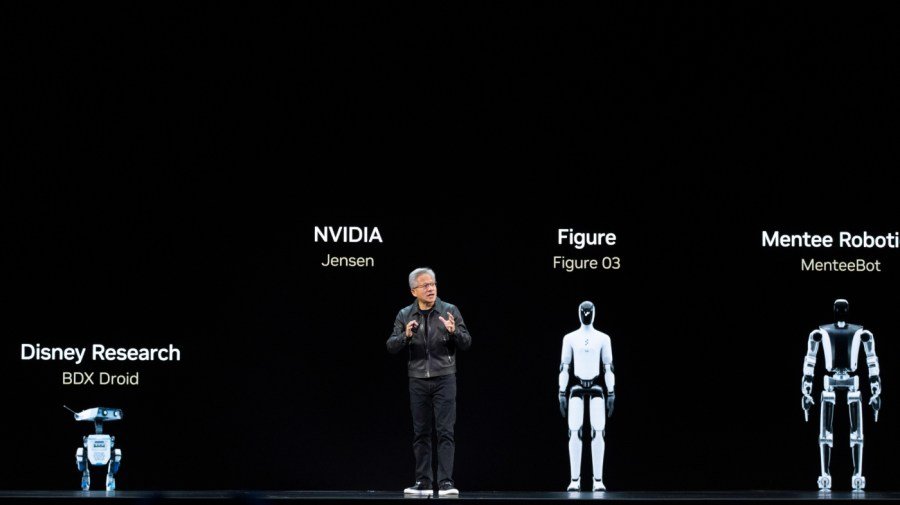

So, what if we could protect both civilians and our military by sending something else in their place — humanoid drones?

We know how that sounds, but let us explain. These humanoids would be powered by interoperable, agentic artificial intelligence. Maybe it’s the stuff of science fiction, but it’s no longer just the purview of screenwriters.

We now have the technology to deploy such robots in war zones to protect populations, establish communications and stabilize what’s happening on the ground. These systems could serve as guardians defending humanitarian corridors and deterring violence without firing a shot.

We are entering a new era in which artificial intelligence becomes a civic institution rather than merely a commercial or military tool. The question is whether we lead the world in building ethical, interoperable systems that protect people, or leave the future to regimes with nefarious intentions.

Let’s be clear: Our adversaries are already using this technology, not to save lives but to take them. Russia uses drone swarms to attack Ukraine’s infrastructure. China integrates AI into its naval and surveillance systems, uses it for population control and shares it with other governments.

Meanwhile, we and our allies hesitate. Our doctrines remain anchored to an era when deterrence meant soldiers and sanctions.

The new challenge isn’t our ability to get our military to the next hot spot. It’s machine coordination. The balance of power around the world is about to be determined by our approach to AI governance.

From Ukraine to Gaza, the international community faces the same challenge: civilians stranded between opposing forces, and humanitarian agencies unable to provide aid. Yet, technology already exists to change that. Autonomous systems could patrol ceasefire zones, protect evacuation routes, deliver aid and detect incoming threats, creating buffer zones where humans can safely operate.

This isn’t fantasy. It’s a moral and strategic imperative. Western democracies must find ways to deter aggression without risking American lives.

The foundation for this future is what we call Omni Cities: democratic, interoperable infrastructures where civic AI integrates across transportation, energy, health and defense systems. It’s not about surveillance, but about saving lives and protecting the peacemaker. It doesn’t control; it coordinates. It is the civic answer to authoritarian implementations of AI.

The U.S. needs to lead this effort and set standards for interoperability, data ethics, and autonomous deterrence. Under United Nations oversight, AI-enabled drones and humanoid guardians could be deployed to regions such as Gaza to secure humanitarian corridors and enforce ceasefires.

They would act not as instruments of war, but as intermediaries for peace — enforcing accountability through presence, not violence.

Such a framework would extend beyond conflict zones. Domestic applications could include rapid disaster response to floods, wildfires or hurricanes, where AI could coordinate public infrastructure and autonomous assets to save lives.

If the nuclear age was about avoiding mutual assured destruction, the civic AI era must be about preserving life.

Public perception is understandably shaped by what we’ve seen in movies like “The Terminator.” Skeptics warn that machines lack human morality and that autonomy risks making AI more powerful than our ability to control it. Those concerns are legitimate, but inaction carries its own risk.

Failing to define the ethics of AI deployment leaves it to authoritarian regimes that already use this technology to repress. The challenge isn’t whether to use AI but how to ensure that it serves us.

The new “Space Race” isn’t a contest between capitalism and communism, but rather between authoritarian AI and civic AI. The nations that lead in building interoperable, ethical systems will shape the moral frontier of technology.

So far, President Trump has rejected calls to cooperate in that venture with international partners. But the time has come for the U.S. and its allies to build Omni Cities and deploy these guardians of peace, not to replace humans, but to protect them.

The civic AI era is emerging. The question is whether we will lead it or be led by it.

Cesar R. Hernandez is an Oxford MBA and Equity Fellow at the Harvard Kennedy School, where he studies the intersection of AI, national security, and civic resilience. He is also a developer at MIT’s Media Lab AI Venture Studio.

Alan M. Cohn is a Peabody and Emmy Award-winning journalist.