Google is indexing conversations with ChatGPT that users have sent to friends, family, or colleagues, turning private exchanges intended for small groups into search results visible to millions.

A basic Google site search using part of the link created when someone proactively clicks “Share” on ChatGPT can uncover conversations where people reveal deeply personal details, including struggles with addiction, experiences of physical abuse, or serious mental health issues—sometimes even fears that AI models are spying on them. While the users’ identities aren’t shown by ChatGPT, some potentially identify themselves by sharing highly specific personal information during the chats.

A user might click “Share” to send their conversation to a close friend over WhatsApp or to save the URL for future reference. It’s unlikely they would expect that doing so could make it appear in Google search results, accessible to anyone. It’s unclear whether those affected realize that their conversations with the bot became publicly accessible once they clicked the Share button, presumably believing they were sharing with a small audience.

Nearly 4,500 conversations come up in results for the Google site search, though many don’t include personal details or identifying information. This is likely not the full count, as Google may not index all conversations. (Because of the personal nature of the conversations, some of which divulge highly personal information including users’ names, locations, and personal circumstances, Fast Company is choosing not to link to, or describe in significant detail, the conversations with the chatbot.)

The finding is particularly concerning given that nearly half of Americans in a survey say they’ve used large language models for psychological support in the last year. Three-quarters of respondents sought help with anxiety, two in three looked for advice on personal issues, and nearly six in 10 wanted help with depression. But unlike the conversations between you and your real-life therapist, transcripts of conversations with the likes of ChatGPT can turn up in a simple Google search.

Google indexes any content available on the open web, and site owners are able to remove pages from search results. ChatGPT’s shared links are not intended to appear in search by default and must be manually made discoverable by users, who are also warned not to share sensitive information and can delete shared links at any time. (Both Google and OpenAI declined Fast Company’s requests for comment.)

One user described in detail their sex life and unhappiness living in a foreign country, claiming they were suffering from post-traumatic stress disorder (PTSD) and seeking support. They went into precise details about their family history and interpersonal relationships with friends and family members.

Another conversation discusses the prevalence of psychopathic behaviors in children and at what age they can show, while another user discloses they are a survivor of psychological programming and are looking to deprogram themselves to mitigate the trauma they felt.

“I’m just shocked,” says Carissa Veliz, an AI ethicist at the University of Oxford. “As a privacy scholar, I’m very aware that that data is not private, but of course, ‘not private’ can mean many things, and that Google is logging in these extremely sensitive conversations is just astonishing.”

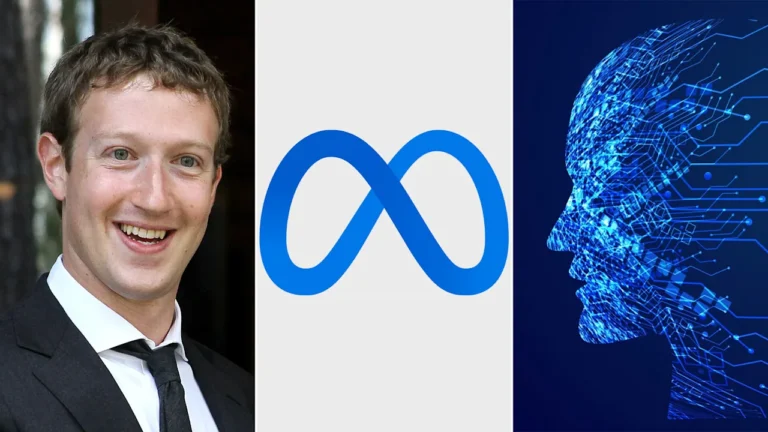

Similar concerns have been raised with competing chatbots, including those run by Meta, which began sharing user queries with its AI systems in a public feed visible within its AI apps. Critics then said user literacy was not high enough to recognize the dangers of sharing private information publicly, a warning that later proved correct as personal details surfaced on the social feed. At the time, online safety experts highlighted worries about the disparity between how users think app functionalities work and how the companies running the apps actually make them work.

Veliz fears that this is an indication of the approach we’re going to see big tech taking when it comes to privacy. “It’s also further confirmation that this company, OpenAI, is not trustworthy, that they don’t take privacy seriously, no matter what they say,” she says. “What matters is what they do.”

OpenAI CEO Sam Altman warned earlier this month that users shouldn’t share their most personal details with ChatGPT because OpenAI “could be required to produce that” if compelled legally to do so by a court. “I think that’s very screwed up,” he added. The conversation, with podcaster Theo Von, didn’t discuss users’ conversations being willingly opened up for indexing by OpenAI.

“People expect they can use tools like ChatGPT completely privately,” says Rachel Tobac, a cybersecurity analyst and CEO of SocialProof Security, “but the reality is that many users aren’t fully grasping that these platforms have features that could unintentionally leak their most private questions, stories, and fears.”