With all the news in the quantum world this month, including DARPA’s new list of the most viable quantum companies and Quantinuum’s announcement of “the most accurate quantum computer in the world,” IBM, not to be outdone, put out a statement of its own. The top-line message: We’re doing great!

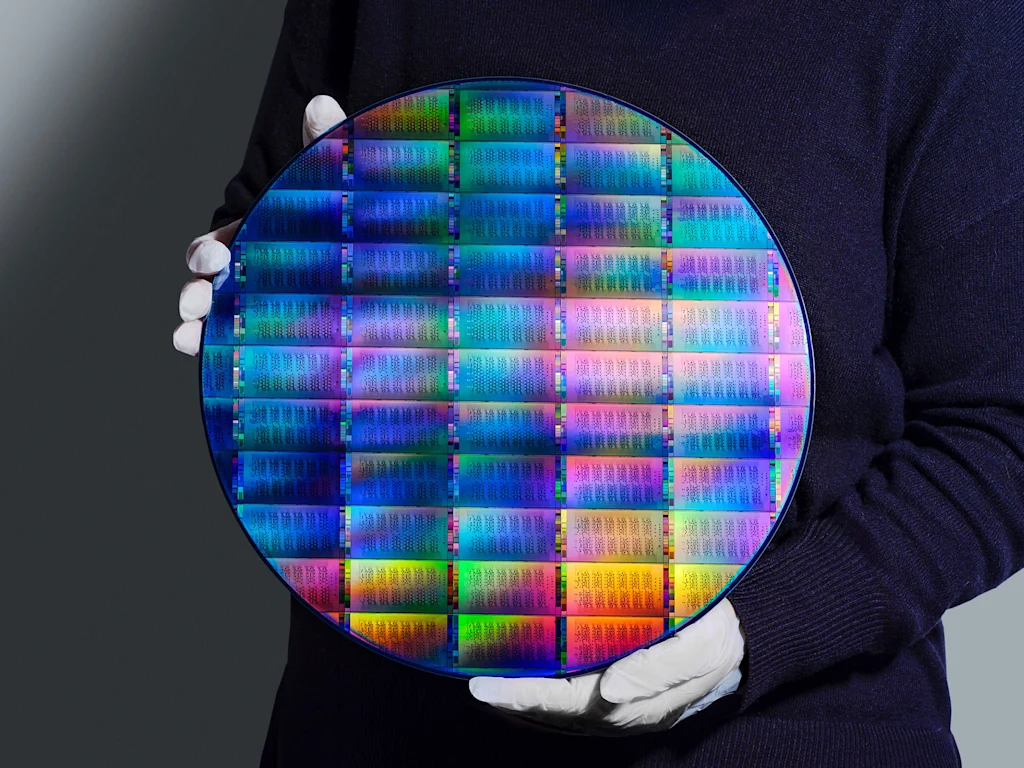

IBM’s quantum program is hitting all the milestones set out in its most recent road map, and it is accelerating progress toward a large-scale, fault-tolerant quantum computer by shifting production of its quantum processors out of its research labs to an advanced 300mm wafer fabrication facility at the Albany NanoTech Complex. The move will double the speed at which IBM can produce quantum processors, and enable a tenfold increase in their physical complexity.

The company also announced two new processors, which IBM Fellow and Director of Quantum Systems Jerry Chow told me, on a recent tour of the company’s lab in Yorktown Heights, represent the company’s two-pronged path moving forward.

The IBM Quantum Nighthawk processor, which allows more complex computations with the same low error rates as its predecessor, is built for near-term “quantum advantage”—applications that show an edge over classical (non-quantum) computing approaches alone.

By combining high-performance computing (HPC) with quantum processors, IBM believes that researchers will show verifiable examples of quantum advantage in 2026. The company has joined with Algorithmiq, Flatiron Institute, and BlueQubit to create an open, community-led “quantum advantage tracker” to systematically monitor and verify emerging demonstrations of advantage.

The company’s experimental IBM Quantum Loon processor, on the other hand, is a step toward the company’s vision of large-scale “fault-tolerant” quantum computing, which it aims to realize by 2029. The Loon chip demonstrates a new architecture capable of implementing and scaling all the components needed for practical, high-efficiency quantum error correction.

A year ahead of schedule, IBM also showed that it could use classical computing hardware to accurately decode errors in real time, relying on efficient qLDPC (quantum low-density parity check) codes.

“You can’t just wait for fault-tolerance,” says Chow. “Even when you get to those machines, you’re going to look at, how do you integrate with the classical side? How do you actually build all the tools and the libraries, all the software pieces [or applications]?”

“You can already start to build that with the machines today,” Chow continues. “They’re going to be at a different scale and more heuristic [trial and error] in nature. But it’s better to get on board than to just wait and have it show up on your doorstep and not know what to do with it.”